
At a time when cloud-hosted AI assistants such as ChatGPT and Claude dominate and user data routinely travels to remote servers, a new Rust-based tool called LocalGPT offers a fortress-like, local-first alternative.

Shipped as a single executable of roughly 27 MB, LocalGPT runs on the user’s own device, keeping sensitive data and tasks out of the cloud. Inspired by and compatible with the OpenClaw framework, it emphasizes persistent memory, autonomous operation, and minimal dependencies, which makes it a strong security option for businesses and privacy-conscious individuals.

Rust’s memory-safety guarantees are central to LocalGPT: safe Rust rules out whole classes of bugs, such as buffer overflows and use-after-free errors, that plague AI tools written in C or C++. Because it needs no Node.js, Docker, or Python runtime, it also presents a smaller attack surface, with no runtime package ecosystem to compromise and no container layer to break out of.

“Your information remains yours,” the project’s GitHub README states, with all processing kept on the user’s device. This local-first approach reduces exposure to the man-in-the-middle attacks and data-exfiltration risks typical of SaaS AI.

LocalGPT Security Characteristics

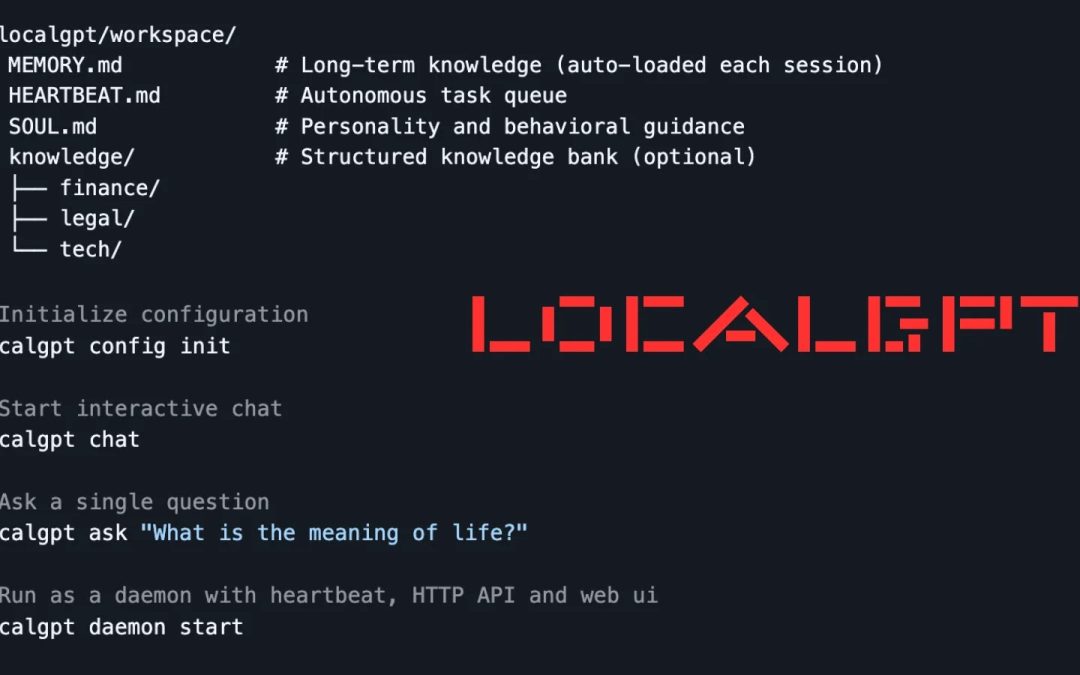

LocalGPT’s persistent memory lives in plain Markdown files under `~/.localgpt/workspace/`: `MEMORY.md` for long-term knowledge, `HEARTBEAT.md` for the task list, `SOUL.md` for personality directives, and a `knowledge/` folder for structured reference material.
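To make the layout concrete, here is a minimal Python sketch that scaffolds the directory structure described above under a chosen root. The file and folder names come from the article; the function name `scaffold_workspace` and the starter text are hypothetical, and this is not what `localgpt config init` actually writes.

```python
from pathlib import Path

# File and folder names follow the article; the starter contents are
# hypothetical placeholders, not the templates LocalGPT itself creates.
WORKSPACE_FILES = {
    "MEMORY.md": "# Memory\n\nLong-term facts to remember.\n",
    "HEARTBEAT.md": "# Heartbeat\n\n- [ ] Example background task\n",
    "SOUL.md": "# Soul\n\nPersonality directives.\n",
}


def scaffold_workspace(root: Path) -> Path:
    """Create <root>/.localgpt/workspace with the memory files and knowledge/ folder."""
    workspace = root / ".localgpt" / "workspace"
    # parents=True also creates the intermediate .localgpt/ directory.
    (workspace / "knowledge").mkdir(parents=True, exist_ok=True)
    for name, starter in WORKSPACE_FILES.items():
        path = workspace / name
        if not path.exists():  # never overwrite memory the user already has
            path.write_text(starter, encoding="utf-8")
    return workspace
```

Calling `scaffold_workspace(Path.home())` would produce the `~/.localgpt/workspace/` tree. Because everything is plain text, the memory can be inspected, diffed, or backed up with ordinary tools.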

These files are indexed with SQLite FTS5 for fast full-text search and with sqlite-vec for semantic search over embeddings generated locally by fastembed. There are no external databases and no cloud sync, which removes a class of persistence-related threats.
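The full-text half of that index can be approximated with Python’s built-in `sqlite3` module. This sketch assumes an SQLite build with FTS5 enabled (true of most Python distributions); the table name `chunks`, its columns, and the one-row-per-file granularity are hypothetical, and the sqlite-vec/fastembed semantic layer is not shown.

```python
import sqlite3


def build_index(docs: dict[str, str]) -> sqlite3.Connection:
    """Load {path: markdown_text} pairs into an in-memory FTS5 table."""
    conn = sqlite3.connect(":memory:")
    # Hypothetical schema: one row per file; `path` is stored but not tokenized.
    conn.execute("CREATE VIRTUAL TABLE chunks USING fts5(path UNINDEXED, body)")
    conn.executemany("INSERT INTO chunks (path, body) VALUES (?, ?)", docs.items())
    conn.commit()
    return conn


def search(conn: sqlite3.Connection, query: str, limit: int = 5) -> list[str]:
    """Return matching paths, best match first (a lower bm25() score is better)."""
    rows = conn.execute(
        "SELECT path FROM chunks WHERE chunks MATCH ? ORDER BY bm25(chunks) LIMIT ?",
        (query, limit),
    )
    return [path for (path,) in rows]
```

Note that the string passed to `MATCH` uses FTS5 query syntax, so characters such as `"` and `*` carry special meaning and raw user input may need quoting.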

The autonomous “heartbeat” feature runs background tasks during user-defined active hours (for example, 09:00–22:00), checking in every 30 minutes by default. It offloads routine work without constant supervision, while keeping execution local to limit opportunities for lateral movement by malware.
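The scheduling rule can be sketched as follows: fire once per interval while inside the active window, otherwise wait for the window to reopen. This is an illustrative model only; the function names, the exclusive end time, and the support for windows that wrap past midnight are assumptions rather than documented LocalGPT behavior.

```python
from datetime import datetime, time, timedelta


def in_active_hours(t: time, start: time, end: time) -> bool:
    """True if t lies in [start, end); a window with start > end wraps past midnight."""
    if start <= end:
        return start <= t < end
    return t >= start or t < end


def next_heartbeat(
    now: datetime,
    interval: timedelta = timedelta(minutes=30),
    start: time = time(9, 0),
    end: time = time(22, 0),
) -> datetime:
    """Return when the next heartbeat should fire after `now`."""
    candidate = now + interval
    # Next moment the active window opens: today if still ahead, else tomorrow.
    opening = datetime.combine(now.date(), start, tzinfo=now.tzinfo)
    if opening <= now:
        opening += timedelta(days=1)
    if in_active_hours(candidate.time(), start, end):
        # Waking from inactive hours: fire at the opening instead of skipping it.
        if not in_active_hours(now.time(), start, end) and opening < candidate:
            return opening
        return candidate
    # The regular tick would land outside active hours, so wait for the window.
    return opening
```

With the defaults, a check at 21:45 is deferred to 09:00 the next morning rather than firing at 22:15.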

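LocalGPT supports multiple providers, including Anthropic (Claude), OpenAI, and Ollama, configured in `~/.localgpt/config.toml` with API keys for hybrid setups. Memory, indexing, and scheduling stay on the device, but when a cloud provider such as Anthropic or OpenAI is selected, prompts are sent to that provider’s API; pairing LocalGPT with a local Ollama model keeps inference on the machine as well.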
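The privacy trade-off of a hybrid setup comes down to where the model endpoint lives. This hypothetical helper classifies an endpoint as on-device or remote. The provider names match the article and the URLs are the providers’ public defaults (Ollama listens on `localhost:11434` out of the box), but the dictionary and function are illustrative and do not reflect LocalGPT’s actual `config.toml` schema.

```python
from typing import Optional
from urllib.parse import urlparse

# Public default endpoints for the providers named in the article.
# Illustrative only: this is not LocalGPT's config.toml schema.
DEFAULT_ENDPOINTS = {
    "anthropic": "https://api.anthropic.com",
    "openai": "https://api.openai.com",
    "ollama": "http://localhost:11434",  # Ollama's default local address
}

LOOPBACK_HOSTS = {"localhost", "127.0.0.1", "::1"}


def prompts_leave_device(provider: str, endpoint: Optional[str] = None) -> bool:
    """True if requests to this provider would travel beyond the local machine."""
    url = endpoint or DEFAULT_ENDPOINTS[provider]
    # .hostname is lower-cased and strips the brackets from IPv6 literals.
    host = urlparse(url).hostname
    return host not in LOOPBACK_HOSTS
```

The takeaway is that “local” depends on the endpoint, not the provider name: an Ollama server on another machine still moves prompts off the device.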

Installation takes a single command: `cargo install localgpt`. To get started, run `localgpt config init` to initialize the configuration, `localgpt chat` for an interactive session, or `localgpt ask "What is the purpose of life?"` for a one-off question.

Daemon mode (`localgpt daemon start`) launches a background service that exposes an HTTP API, including `/api/chat` for integrations and `/api/memory/search?q=` for querying memory.
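A client for the memory-search endpoint might look like this minimal standard-library sketch. The article does not state the daemon’s host, port, or response format, so the base URL is a caller-supplied placeholder and the JSON decoding is an assumption; `/api/chat` is left out because its request body is not described here.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def memory_search_url(base_url: str, query: str) -> str:
    """Build the GET URL for the daemon's /api/memory/search endpoint."""
    # urlencode percent-escapes the query: spaces become '+', '&' becomes %26.
    params = urlencode({"q": query})
    return f"{base_url.rstrip('/')}/api/memory/search?{params}"


def memory_search(base_url: str, query: str, timeout: float = 5.0):
    """Call a running daemon; assumes (unverified) that it replies with JSON."""
    with urlopen(memory_search_url(base_url, query), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Usage would be along the lines of `memory_search("http://127.0.0.1:PORT", "quarterly audit")` with the daemon running, substituting whatever address it actually binds.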

The CLI covers daemon management (`start`, `stop`, `status`), memory operations (`search`, `reindex`, `stats`), and configuration viewing. A web UI and a desktop GUI (built with eframe) offer friendlier interfaces. Built on Tokio for asynchronous I/O, Axum for the API server, and SQLite extensions, LocalGPT is optimized for low-resource environments.

LocalGPT’s OpenClaw compatibility covers the SOUL, MEMORY, and HEARTBEAT files as well as skills, enabling modular, auditable extensions without vendor lock-in.

Security analysts commend its SQLite-backed indexing as resistant to tampering, making it ideal for air-gapped forensic use or classified operations. In red-team environments, its simplicity complicates reverse-engineering.

With AI-driven phishing and prompt-injection attacks surging (up 300% in 2025, according to MITRE), LocalGPT offers a hardened baseline. Early adopters in the finance and legal sectors say its separate `knowledge/` silos help prevent cross-contamination and information leaks.

LocalGPT is not immune to LLM hallucinations or to vulnerabilities on the local machine, but it makes a strong case for AI independence from big tech. The tool is available on GitHub for anyone who wants to add it to their workflow.
